Principle of particle physics, Part 2.

Around the turn of the 20th century, the prevailing atomic model was the "Thomson model". W. Thomson and J. J. Thomson had set up a model in which the matter of the atom is homogeneously distributed over the whole spherical atom. In this model the positive charge was likewise assumed to be homogeneously distributed over the sphere, with the electrons embedded in it. In 1903, Lenard discovered that electron rays can move freely through atoms, and he concluded that atoms must be essentially empty space.

Rutherford wanted to find out how the charges are distributed in atoms. He exposed a gold foil to alpha particles from a radon source. In 1911 he observed, on a phosphor screen behind the gold foil, how the alpha particles penetrated the foil. He expected to find a sort of diffuse glow on the screen, as if the gold foil were a nebulous obstacle.

The result was completely different from what he expected. Most of the alpha particles passed through the foil without any deflection. Only a few alpha particles were scattered in various directions.

His detailed evaluation convinced him that this finding completely contradicted the Thomson atomic model. Instead, the experiment confirmed Lenard's finding that atoms are virtually empty space. The rare events in which alpha particles had been scattered could only be explained if the gold foil contained some very hard and rigid lumps, which were very small and which contained the whole positive charge of the atom. This led to the atomic model of a pointlike nucleus, which houses practically all of the mass and the whole positive charge of the atom.

As we all know, this became the foundation of all subsequent atomic models.

But Rutherford's conclusion was wrong and still is.

As modern physics teaches, elementary particles have a spatial extent which depends essentially on the energy or the momentum of the particle. We express this as the "wavelength" of the particle. And we know that the volume required by most elementary particles corresponds to a single wavelength. So a slow particle fills a large volume, whereas a fast particle fills only a small volume.
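The energy dependence of the wavelength can be made concrete with the de Broglie relation λ = h/p. The following Python sketch computes λ for an alpha particle. The two kinetic energies are illustrative assumptions: about 5 MeV, of the order of the natural alpha emitters available in Rutherford's time, and 1 eV for a hypothetical slow alpha. The sketch only computes λ; equating it with the volume a particle occupies is the model's own assumption.

```python
import math

# Physical constants (CODATA values, rounded)
HC_MEV_FM = 1239.841984       # h*c in MeV·fm
ALPHA_REST_MEV = 3727.379     # alpha-particle rest energy in MeV


def de_broglie_wavelength_fm(kinetic_mev: float, rest_mev: float) -> float:
    """Relativistic de Broglie wavelength lambda = h/p, in femtometres.

    Uses (pc)^2 = T^2 + 2*T*mc^2, which holds exactly for a free particle
    with kinetic energy T and rest energy mc^2.
    """
    pc = math.sqrt(kinetic_mev**2 + 2.0 * kinetic_mev * rest_mev)
    return HC_MEV_FM / pc


# Assumed example energies: 5 MeV (fast alpha) and 1 eV = 1e-6 MeV (slow alpha)
fast = de_broglie_wavelength_fm(5.0, ALPHA_REST_MEV)
slow = de_broglie_wavelength_fm(1e-6, ALPHA_REST_MEV)
print(f"5 MeV alpha: {fast:.2f} fm")
print(f"1 eV alpha:  {slow:.0f} fm")
```

The 5 MeV alpha comes out at about 6.4 fm, whereas the 1 eV alpha has a wavelength of roughly 14 pm, more than a thousand times longer.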

The word "fills" does imply a sort of space consumption, which corresponds largely to the classical physical law: "The space occupied by one body cannot be occupied by a second body." However, this meaning has to be qualified in the modern sense expressed by the Pauli principle, which in modern form reads: any particle prevents another particle in the same quantum state from penetrating into the volume spanned by the particle's "wavelength"; however, as soon as a particle is in a different quantum state, it may intrude into a space which already houses another particle.

When Rutherford probed the gold atoms with alpha particles, the volume of the nuclear particles was necessarily small, because the alpha particles had high energies.

This does not, however, contradict Thomson's atomic model at all, because that model was made for normal physical and chemical conditions, where the energy imparted to the atom is smaller by a factor of millions than in Rutherford's experiments. Since Rutherford used high-energy alpha rays, the experiment itself imposed a very short wavelength on the atoms of the gold foil. Had he used low-energy rays instead, he would have observed what he expected: a diffuse glow on the phosphor screen.

This is an important finding, which I realised only thanks to the ether model: Thomson's atomic model was not so wrong after all. It assigns the charges and masses of the "atomic nuclei" to the whole space of the atom, and this is not disproven by Rutherford's experiment or by any newer finding.

As this example shows, the ether model opens one's eyes. It allows nature to be viewed from a different perspective, making obvious things which are otherwise obscured.

In my view it is evident that the universe is filled with a substance which I call "ether", in accordance with the meaning the word had about 130 years ago. The ether is very dense and very hard, and through its physical properties it gives rise to all the phenomena we call physics.

In contrast to the ether model of the last century, this new ether model attributes all matter and energy to cavities in the ether. The ether is not homogeneous; it can fracture and form cracks. These cracks may widen, thereby assigning an energy field to the space. The energy density that must be attributed, e.g., to conventional electromagnetic fields is, in this model, a real energy field formed by widened cracks between the ether bricks.

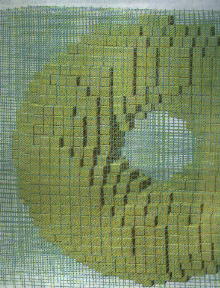

This is schematically shown in the following pictures.

As the picture shows, the radial cracks are widened. The tangential cracks are shown only to clarify the picture; they do not represent any energy content.

It is essential that the cracks become narrower the farther they are from the pointlike charge. The gap size must decline according to a 1/r² law in order to give a radial decline of the energy density with the fourth power of r. This is possible, e.g., if the radial tension decreases as 1/r² and the gap size is proportional to the radial tension. These assumptions are sound, and they agree with classical physics both for the electric field and for the ether model itself.
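The scaling argument can be checked numerically. The sketch below compares the classical energy density u = ε0E²/2 of a point charge with a hypothetical crack model in which the gap width falls as 1/r² and the energy density is taken to be proportional to the gap cross section, i.e. to the square of the width. The constant g0 and all function names are illustrative assumptions. Estimating the power-law exponent between two radii should give -4 in both cases.

```python
import math

EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m
E_CHARGE = 1.602176634e-19  # elementary charge, C


def coulomb_field(r: float) -> float:
    """Field strength of a point charge e at distance r (V/m)."""
    return E_CHARGE / (4.0 * math.pi * EPS0 * r**2)


def field_energy_density(r: float) -> float:
    """Classical electrostatic energy density u = eps0 * E^2 / 2 (J/m^3)."""
    return 0.5 * EPS0 * coulomb_field(r) ** 2


def gap_area(r: float, g0: float = 1.0) -> float:
    """Hypothetical crack cross section: width g0/r^2, area taken as width^2."""
    width = g0 / r**2
    return width**2


def log_slope(f, r1: float, r2: float) -> float:
    """Exponent n in f ~ r^n, estimated from two sample points."""
    return math.log(f(r2) / f(r1)) / math.log(r2 / r1)


print(log_slope(field_energy_density, 1e-15, 1e-12))  # classical field
print(log_slope(gap_area, 1.0, 10.0))                  # assumed crack model
```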

This view, however, requires that tensions and strains be conducted by the ether. Therefore the ether cannot be strictly incompressible; it must be regarded as deformable and compressible.

This does not, however, mean that any pressure disturbance propagates in the ether at the speed of light, as was postulated for the ether model of the 19th century. Instead, the resistance of the ether to compression (its bulk modulus) may be much larger, so that any pressure disturbance and crack formation may propagate at very high velocities.

The speed of light, on the other hand, governs the widening of the cracks. So any cavity propagates at the speed of light.

There are a number of classical physical analogues which allow the model to decouple the speed of light (propagation of cavities) from the speed of propagation of pressure disturbances in the ether.

This picture shows that positive and negative charges generate different "fields", but both have positive energy densities, which decline with the fourth power of r.

The cracks have a triangular cross section, with the acute angle pointing towards the positive charges.

This picture makes it possible to understand the "charge density" of an electric field as a phenomenon very much in line with the existence of real charges. Every conical crack may be understood as a real electric dipole, presenting a positive charge and a negative charge on opposite sides.

The energy density is proportional to the base areas of the prismatic cones, i.e. the cross section of the gaps at the bottom of each brick layer. Since the energy density of an electrostatic field is proportional to the square of the electric field strength, the field strength is proportional to the square root of the base area, i.e. to the effective linear dimension of the base area. So the effective dimension of the base area is directly related to the electric field strength.
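The step from energy density to field strength is simply the inversion of u = ε0E²/2. Assuming, as the model does, that u is proportional to the base area A, with a hypothetical proportionality constant KAPPA, the field strength scales as the square root of A:

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m

# Hypothetical constant of the model: u = KAPPA * A (illustrative value only)
KAPPA = 1.0


def field_from_energy_density(u: float) -> float:
    """Invert u = eps0 * E^2 / 2 to get the field strength E (V/m)."""
    return math.sqrt(2.0 * u / EPS0)


def field_from_base_area(area: float) -> float:
    """Field strength implied by the assumption u = KAPPA * A."""
    return field_from_energy_density(KAPPA * area)


e1 = field_from_base_area(1.0)
e4 = field_from_base_area(4.0)
print(e4 / e1)  # quadrupling the base area doubles E
```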

Furthermore, it may be seen that, owing to the proportionality between electric field strength and crack cross section, the electric field strength may be generated by a sort of pressure which is independent of the field strength itself. This pressure is, in turn, directly related to magnetic and nuclear forces in much the same way as to electric forces.

This picture can also show, in comparison with the picture of the light quantum (which also embeds a triangular cavity), that light is a phenomenon with a transverse electric force. This gives a particularly interesting clue to the nature of light, and it may answer many questions which puzzled physicists more than 100 years ago and which finally contributed substantially to the abandonment of the old ether theory.

The picture may also answer the question of why we do not perceive the electric field of light quanta as long as they propagate. The electric field is confined to the single triangular gap at the front of the disturbed region; but when the quantum collides with an obstacle, the disturbance spreads out over space and thereby uncovers the electric and magnetic fields.

These pictures can also show that an elementary particle, which according to the model involves tilting and turning of ether bricks, is inherently associated with electric and magnetic fields.

A very interesting aspect of the binding forces came from the vortex crystal model. When two elementary particles align as shown in the picture, the flow-dynamical forces can be calculated to be equivalent to about 5 to 10 MeV per nucleon.

The binding energies stay about constant even if the wavelength of the particles increases, because the number of vortices then increases as well: although the flow dynamics change, the weakening of the binding forces of individual vortex rings is compensated by their increased number.
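For comparison with the 5 to 10 MeV per nucleon quoted for the vortex crystal model, measured binding energies per nucleon follow from the mass defect. This is the standard textbook calculation, not the vortex crystal computation; the atomic masses are rounded values from the Atomic Mass Evaluation, and the choice of nuclides is only an example.

```python
# Atomic masses in unified atomic mass units (rounded AME values)
M_H1 = 1.00782503      # hydrogen-1 atom (proton + electron)
M_N = 1.00866492       # free neutron
U_TO_MEV = 931.49410   # energy equivalent of 1 u in MeV

NUCLIDES = {
    # name: (Z, A, atomic mass in u)
    "He-4": (2, 4, 4.00260325),
    "Fe-56": (26, 56, 55.93493633),
    "U-238": (92, 238, 238.05078826),
}


def binding_energy_per_nucleon(z: int, a: int, atomic_mass: float) -> float:
    """B/A in MeV from the mass defect; using H-1 atoms makes electron masses cancel."""
    mass_defect = z * M_H1 + (a - z) * M_N - atomic_mass
    return mass_defect * U_TO_MEV / a


b_per_a = {name: binding_energy_per_nucleon(z, a, m) for name, (z, a, m) in NUCLIDES.items()}
for name, value in b_per_a.items():
    print(f"{name}: {value:.2f} MeV per nucleon")
```

The results, roughly 7.1 MeV for He-4, 8.8 MeV for Fe-56 and 7.6 MeV for U-238, show that the measured binding energy per nucleon stays within a fairly narrow band over a wide range of nuclei.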

The attraction of vortex crystals has an analogue in, e.g., two ships travelling side by side, which attract each other by purely flow-dynamical effects.

The numerical evaluation showed that this separated elementary particle can be the electron, with a mass of about 1/1800 of the nucleon mass. To my knowledge, this is the first time that a theoretical description of the ratio of proton mass to electron mass has been given.
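The figure of about 1/1800 can be set against the measured mass ratios. A trivial Python check using the CODATA 2018 rest masses:

```python
# CODATA 2018 rest masses in kg
M_ELECTRON = 9.1093837015e-31
M_PROTON = 1.67262192369e-27
M_NEUTRON = 1.67492749804e-27

proton_ratio = M_PROTON / M_ELECTRON
neutron_ratio = M_NEUTRON / M_ELECTRON
print(f"m_p/m_e = {proton_ratio:.2f}")   # about 1836
print(f"m_n/m_e = {neutron_ratio:.2f}")  # about 1839
```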

All these calculations using the vortex crystal model yield results which include powers of pi. This results mainly from the fact that the formula for the volume of a vortex ring with circular cross section contains the circle number pi squared.
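The π² comes from the volume of a torus, V = 2π²Rr², for a ring of major radius R and circular cross section of radius r (Pappus's theorem: the area πr² swept along a path of length 2πR). The sketch below computes it in closed form and cross-checks it by summing thin cylindrical shells; the radii are arbitrary example values.

```python
import math


def torus_volume(major_radius: float, minor_radius: float) -> float:
    """Closed form V = (pi * r^2) * (2 * pi * R) = 2 * pi^2 * R * r^2."""
    return (math.pi * minor_radius**2) * (2.0 * math.pi * major_radius)


def torus_volume_by_shells(major_radius: float, minor_radius: float, n: int = 20000) -> float:
    """Midpoint-rule sum of shells: V = integral of 2*pi*rho * height(rho) d rho."""
    h = 2.0 * minor_radius / n
    total = 0.0
    for i in range(n):
        rho = major_radius - minor_radius + (i + 0.5) * h
        # Height of the ring's cross section at distance rho from the axis
        height = 2.0 * math.sqrt(max(minor_radius**2 - (rho - major_radius) ** 2, 0.0))
        total += 2.0 * math.pi * rho * height * h
    return total


R, r = 3.0, 1.0
print(torus_volume(R, r) / (R * r**2))  # equals 2*pi^2, about 19.739
print(torus_volume_by_shells(R, r))
```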

Although the vortex crystal model cannot stand on its own, because it does not satisfactorily answer the question of why the elementary length (the Compton wavelength of the resting proton) seems to "govern" all processes, it can serve to identify some physical processes which, in the model, might be responsible for certain phenomena.

Modern physics has developed new techniques for computing the masses of elementary particles. The most elaborate method seems to be "lattice quantum chromodynamics" (lattice QCD), which can yield numerical results using the formalism of K. G. Wilson (see D. H. Weingarten's article in Scientific American, February 1996, p. 104 ff.). Extensive computations carried out on supercomputers seem to prove that lattice QCD includes all the necessary effects. However, the method still needs some numerical input, in particular the values of the quark masses and the energy density of the "chromoelectric field".

I have tried many ways to include something like "quarks" in my modelling. At present I favour the idea that quarks may stand for the cracks and gaps between the ether bricks. The cracks are seen "head on", very much in line with the experimental setup of high-energy physics, which generally detects "quarks" in "head-on collisions".

There are numerous ways for the particles to crack the ether cube at the front. The simplest seems to be abrasion on the rear side, until eventually only a small remnant of the ether cube tilts away and is sucked into the cavity. This is shown in the centre of the picture.

When the gap opens between two ether layers, ether powder might intrude; this has not been included in the picture. The elementary particles may differ considerably in the pattern by which the gap to the undisturbed ether regions opens, broadens and finally leads powder into the newly generated cavity.

This is particularly interesting in high energy physics, where the quarks have been discovered.

It may also be possible that the ether cube tilts in two directions simultaneously, as shown in the right corner of the picture. The two cases differ in the number of gaps which are opened.

The other ways shown involve fracturing of the ether brick in question. In one case, the ether cube breaks into two parts. The front corner shows an even more complicated case, where the ether brick breaks into four parts, thereby generating a complex gap pattern.

The simple gap patterns occur more frequently. So the basic quarks (the "up" quark and the "down" quark) may be associated with the simpler gap patterns, whereas the charmed quarks etc. form more complex patterns. The model also allows for more than one cube to be involved when an elementary particle intrudes into an undisturbed layer of ether bricks.

It is important that even the simple case shown in the centre of the picture involves four different gaps, and each gap might stand for a "quark". So each process shown in the picture is not a quark itself, but is made up of several quarks.

In fact, we might find a number of different geometrical arrangements which might have a counterpart in quark physics. For example, four ether bricks might form a pattern with four equal "rays", or even non-cubic ether bricks might be involved, forming five or more rays.

There is some physical evidence which, in my view, makes this new ether model necessary and directly contradicts the prevailing standard model. The standard model of physics states: the universe is essentially empty. All matter is concentrated in elementary particles, which are virtually pointlike. In fact, there seem to be "proofs" that the elementary particles are exactly pointlike; these proofs rest on the result that any charged particle would instantly explode if its charge were distributed over a physical distance smaller than an elementary length.

The standard model of physics further holds that the universe was created in a "big bang" about 15 billion years ago and has been expanding ever since. All elementary particles in the present-day universe were generated in a cascade of events which can be simulated in the high-energy accelerators of the great research laboratories on earth. However, high-energy physicists admit that they can only simulate events which happened after the first few milliseconds following the big bang.

Modern physics attributes all forces to virtual particles. So when one electron exerts an electrostatic force on another electron, this force is assumed to be generated by a multitude of "virtual photons". A virtual particle differs from a real particle essentially in its lifetime and energy; there is a correspondence between the energy uncertainty of a particle and its lifetime, known as the Heisenberg uncertainty relation. A real elementary particle has enough energy to allow us to see it. A virtual particle, by contrast, has a small energy content, even smaller than its "rest energy". According to the uncertainty principle, even an infinite observation time would not allow us to observe the particle; it is bound to remain unobserved. Therefore it is called a "virtual particle".
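The energy-time relation ΔE·Δt ≳ ħ/2 turns an energy "borrowed" by a virtual state into an order-of-magnitude lifetime. A minimal sketch, assuming for illustration a fluctuation that borrows the rest energy of an electron-positron pair; the function name is hypothetical:

```python
HBAR_MEV_S = 6.582119569e-22   # reduced Planck constant in MeV·s
ELECTRON_REST_MEV = 0.51099895


def fluctuation_lifetime_s(borrowed_energy_mev: float) -> float:
    """Order-of-magnitude lifetime from Delta E * Delta t ~ hbar / 2."""
    return HBAR_MEV_S / (2.0 * borrowed_energy_mev)


# Assumed example: a virtual pair borrowing twice the electron rest energy
dt = fluctuation_lifetime_s(2.0 * ELECTRON_REST_MEV)
print(f"{dt:.2e} s")
```

The result is of the order of 10⁻²² s.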

This model of virtual particles is the basic idea behind quantum electrodynamics as developed by R. Feynman and others. As Feynman stated, all elementary particles are embedded in a sea of virtual elementary particles. Still, this model assumed a "physical mass" to be attributed to the "real" pointlike elementary particle itself, while the virtual particles have essentially no mass.

This, however, is not conclusive. We know from classical physics that an electric field has an energy content. And since mass and energy are equivalent, the electrostatic field has a continuous "mass distribution over all space", even extending to infinity.

Modern physics does see these discrepancies. However, physicists argue that the solution to these questions lies in so-called "renormalisation". In this picture each virtual particle individually has exactly zero mass; but since there is an infinite number of virtual particles in any electric, magnetic or other force field, these infinitely many virtual particles sum up to a finite energy content of the field. In fact, many very elaborate calculations have been made to "prove" that all the physical forces we know can be "renormalised". This was possible, e.g., for the weak nuclear force (the force which controls the decay of free neutrons) by introducing the so-called "intermediate vector bosons" W and Z. This is seen in modern physics as proof that the weak nuclear force is in fact nothing but a special form of the electromagnetic force. (It may be added that the renormalisation concept does not work satisfactorily for the gravitational force; all attempts to find an adequate set of virtual exchange particles which can be renormalised seem to have failed so far.)

Still, this does not satisfactorily answer, e.g., how the electric field can carry energy without carrying mass. All the mass of an electron's electric field is still assumed to be concentrated in a pointlike source, the electron itself. As experiments at DESY in Hamburg have shown, the physical dimensions of the electron are well below one thousandth of an elementary length.

And, as physics has shown, the electron charge must be exactly pointlike, the reason being that electric charges of the same sign repel each other. If, e.g., the electron consisted of two charges of the same sign, spaced by a distance of, say, one thousandth of an elementary length, it would instantly explode. No physical force would be large enough to hold these two charges together, because this compensating force would itself represent an energy content far exceeding the energy content of the whole electron. The same consideration would apply if the electron charge were smeared continuously over the physical dimension of the electron.
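The size of the energies in this argument can be estimated. The sketch assumes, for illustration, that the two charges each carry e/2, and takes the elementary length to be the proton Compton wavelength h/(m_p c) ≈ 1.32 fm, as in the text. It also evaluates the self-energy (3/5)·e²/(4πε0R) of a charge e spread uniformly through a ball of radius R. Both energies are compared with the electron rest energy of 0.511 MeV.

```python
import math

HC_MEV_FM = 1239.841984        # h*c in MeV·fm
ALPHA_FS = 7.2973525693e-3     # fine-structure constant
# e^2 / (4 pi eps0) = alpha * hbar * c, about 1.44 MeV·fm
COULOMB_MEV_FM = ALPHA_FS * HC_MEV_FM / (2.0 * math.pi)
ELECTRON_REST_MEV = 0.51099895
PROTON_REST_MEV = 938.27208816

# "Elementary length" taken as the proton Compton wavelength h/(m_p c)
ELEMENTARY_LENGTH_FM = HC_MEV_FM / PROTON_REST_MEV


def two_halves_energy(d_fm: float) -> float:
    """Repulsion energy (MeV) of two assumed charges e/2 a distance d apart."""
    return COULOMB_MEV_FM * 0.25 / d_fm


def uniform_sphere_energy(radius_fm: float) -> float:
    """Self-energy (MeV) of charge e spread uniformly through a ball of radius R."""
    return 0.6 * COULOMB_MEV_FM / radius_fm


d = 1e-3 * ELEMENTARY_LENGTH_FM
print(two_halves_energy(d) / ELECTRON_REST_MEV)      # in units of m_e c^2
print(uniform_sphere_energy(d) / ELECTRON_REST_MEV)  # in units of m_e c^2
```

At one thousandth of an elementary length, the repulsion of the two halves comes to several hundred times the electron rest energy, and the uniformly smeared charge to over a thousand times.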

This is a good example of the sort of reasoning modern physics uses to prove some findings right and others wrong. For example, the electron is proven to be pointlike because it could not afford the energy to remain stable if it had "physical dimensions". As modern physics has grown used to living with a great many paradoxes, nobody really dares to question these obvious internal contradictions.

One contradiction of the standard model is, of course, that the electron on the one hand carries its whole charge and mass in one point, while on the other hand the electric field cannot reach this point. In particular, it was shown at the beginning of the 20th century that the field can only exist outside the distance called the "classical electron radius". The reasoning is that the energy content of the electric field, integrated from a certain radius out to infinity, adds up to the electron rest energy. This radius is called the "classical electron radius", and it happens to be (about) equal to the elementary length, i.e. the Compton wavelength of the resting proton, or to a typical dimension of the cross section of many nuclear reactions. Even high-energy collisions of proton pairs result in cross-sectional dimensions of about one elementary length.
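The integration referred to here can be written out. For a point charge the field energy in a shell is u·4πr²dr = e²/(8πε0r²)dr, so the energy outside a radius R is e²/(8πε0R). Setting this equal to m_e c² gives R = e²/(8πε0m_e c²) ≈ 1.41 fm. This is half the conventionally quoted classical electron radius r_e = e²/(4πε0m_e c²) ≈ 2.82 fm, because the conventional definition drops the numerical factor. The sketch below checks the integral numerically on a logarithmic grid and compares both radii with the proton Compton wavelength; the outer cutoff r_max and the grid size are arbitrary numerical choices.

```python
import math

HC_MEV_FM = 1239.841984
ALPHA_FS = 7.2973525693e-3
COULOMB_MEV_FM = ALPHA_FS * HC_MEV_FM / (2.0 * math.pi)  # e^2/(4 pi eps0) in MeV·fm
ELECTRON_REST_MEV = 0.51099895
PROTON_REST_MEV = 938.27208816


def field_energy_outside(radius_fm: float, r_max_fm: float = 1e9, n: int = 20000) -> float:
    """Integrate u * 4*pi*r^2 dr from radius to r_max (MeV) with the midpoint rule.

    Uses s = ln r, so dr = r ds; the integrand u * 4*pi*r^2 is e^2/(8 pi eps0 r^2).
    """
    s0, s1 = math.log(radius_fm), math.log(r_max_fm)
    h = (s1 - s0) / n
    total = 0.0
    for i in range(n):
        r = math.exp(s0 + (i + 0.5) * h)
        total += COULOMB_MEV_FM / (2.0 * r * r) * r * h
    return total


r_field = COULOMB_MEV_FM / (2.0 * ELECTRON_REST_MEV)  # field energy outside = m_e c^2
r_e = COULOMB_MEV_FM / ELECTRON_REST_MEV              # conventional classical electron radius
lambda_p = HC_MEV_FM / PROTON_REST_MEV                # proton Compton wavelength

print(f"R from integration: {r_field:.3f} fm")
print(f"conventional r_e:   {r_e:.3f} fm")
print(f"proton Compton wl:  {lambda_p:.3f} fm")
print(f"check: {field_energy_outside(r_field):.5f} MeV")
```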

So the simple consideration of how close to the electron's location its field can exist leads to the result that the field can only be defined within a "grid" of one elementary unit of length. The ether cube model is consistent both with the findings of very tiny dimensions of electrons (just the minute gap size between ether bricks) and with the requirement that the electric field be "separated" from the electron itself by at least the classical electron radius.

The question is how we set up the ether model. The question cannot be: "Are there any proofs or disproofs of the ether model?"

date of last issue: 14. 4. 1997